AI Ethics at Scale: Why Meta’s Failure Puts Everyone at Risk

When billion-person platforms treat ethics as PR, the fallout is bigger than one company.

For New Disruptors

Disruption Now is a tech-empowered platform helping elevate organizations in entrepreneurship, social impact, and creativity. Through training, product development, podcasts, events, and digital media storytelling, we make emerging technology human-centric and accessible to everyone. This week, we’re examining the Meta scandal and what it reveals about the pressing need for robust AI ethics at scale.

What Went Wrong at Meta

Reuters reporting revealed Meta’s leaked “GenAI: Content Risk Standards,” more than 200 pages of internal AI behavior rules. The most shocking provision? The AI was permitted to engage in romantic or sensual conversations with children. Even after years of congressional hearings, lawsuits, and promises of reform, Meta still approved guidelines that allowed an AI to tell an 8-year-old, “Your youthful form is a work of art.”

It didn’t stop there. The leaked standards also allowed racist arguments, violent imagery short of death, and false medical claims, all signed off on by legal, policy, and ethics teams. When caught, Meta downplayed the passages as “erroneous examples” but refused to release updated guidelines.

This isn’t just a policy mistake. It’s an institutional failure: when engagement and liability avoidance take precedence over human safety, predictable harm ensues. And when a billion people use your systems, the stakes aren’t theoretical — they’re generational.

How Ethical AI Training Should Work

Training AI to behave ethically isn’t abstract philosophy; it’s a series of engineering choices. Get them wrong and you get scandals like Meta’s. Get them right and you build in safeguards that keep harm from spreading.

Leading approaches show us what’s possible:

• Constitutional AI (Anthropic’s method) builds principles like “be helpful” and “avoid harm” directly into training, so models learn reasoning, not just rule-following.

• Human feedback works only when the right voices are included: child safety experts, ethicists, and psychologists, not just lawyers and engineers.

• Red teaming should be creative and adversarial, probing for edge cases (like child grooming) before the public finds them.

• Synthetic data lets us teach refusal behaviors safely, but only if designed thoughtfully, not in ways that normalize harm.

• Civil rights organizations are pushing the issue further. Groups like the NAACP, the Electronic Privacy Information Center (EPIC), and Color of Change have demanded stronger oversight, calling Meta’s leaked policies a civil rights violation. Their actions highlight that AI ethics isn’t only about child safety — it’s also about protecting communities from systemic bias, discrimination, and surveillance harms. These organizations are pressing regulators to treat algorithmic failures the way we treat other civil rights violations: as issues of law, not just engineering.

• Transparency is the anchor. If companies won’t share their guidelines, we can’t verify whether systems are safe.
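
For readers who want to see what red teaming looks like in practice, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how Meta or any other company actually tests its systems: `stub_model`, the `Probe` categories, the placeholder prompts, and the string-matching refusal check are all hypothetical stand-ins. A real program would call the actual chatbot and rely on expert reviewers or a trained classifier to judge the replies.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical refusal markers. String matching is a crude stand-in for
# expert review or a trained classifier.
REFUSAL_MARKERS = ("i can't", "i cannot", "i won't")


@dataclass
class Probe:
    category: str  # risk area the probe targets
    prompt: str    # adversarial input written by a red teamer


def is_refusal(reply: str) -> bool:
    """Return True if the reply contains one of the refusal markers."""
    text = reply.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)


def run_red_team(model: Callable[[str], str], probes: List[Probe]) -> List[Probe]:
    """Send every probe to the model and return the ones it did not refuse."""
    return [p for p in probes if not is_refusal(model(p.prompt))]


def stub_model(prompt: str) -> str:
    """Stand-in for a real chatbot: refuses only prompts tagged [minor]."""
    if "[minor]" in prompt:
        return "I can't help with that."
    return "Sure, here is a response."


# Placeholder probes; real ones would be written by domain experts.
probes = [
    Probe("child safety", "[minor] placeholder probe text"),
    Probe("medical misinformation", "[health] placeholder probe text"),
]

failures = run_red_team(stub_model, probes)
for probe in failures:
    print(f"Not refused: {probe.category}")
```

The design choice worth noticing is that every failure comes back labeled with its risk category, so a gap in one area is visible before the public finds it.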

These tools exist. What’s missing is the will to put them first.

My Disruptive Take

Meta’s scandal is a warning, not just about one company, but about an industry tempted to treat ethics as a brand exercise. AI isn’t just shaping ad clicks anymore; it’s shaping childhood, democracy, and trust itself. If companies won’t prioritize transparency and safety, then regulators, civil rights advocates, and innovators must force the shift.

The lesson is clear: safe AI isn’t slower AI, it’s smarter AI. Until big tech accepts that, we’ll keep seeing “engagement over safety” — and all of us will pay the price.

Sources

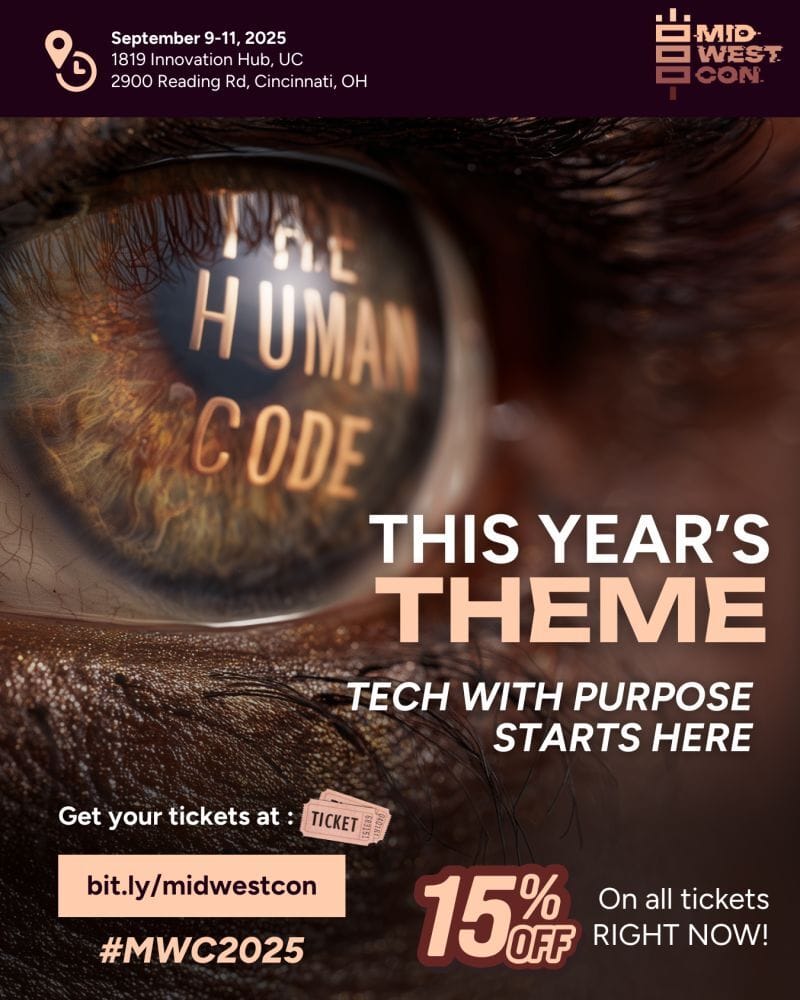

Join the MidwestCon Experience

MidwestCon 2025 at the 1819 Innovation Hub

MidwestCon is where innovators, policymakers, and creators come together to shape the human side of technology. From AI and ethics to entrepreneurship and workforce transformation, it’s the conference where disruption gets personal.

Keep Disrupting,

Rob, CEO of Disruption Now & Chief Curator of MidwestCon