Apple Says AI Can’t Reason? Let’s Talk About That...

This New Apple Paper? Big Claim, Even Bigger Spin

Apple just released a new paper called The Illusion of Thinking—and the headline is loud: AI can’t really reason.

Sounds dramatic, right? But here’s the catch—they left out a lot. On purpose.

To make a long story bearable, the actual results in the paper don’t match the headline. But Apple knows most people won’t read past that headline—and that’s the point. This paper feels more like a viral marketing play than a transparent research effort.

So let’s break it down and talk about what they said, what they didn’t say, and why it matters.

What Apple Got Wrong—or Just Didn’t Mention

1️⃣🧩The Tests Were Real, But Narrow

Apple tested reasoning models like OpenAI’s o3-mini, Claude 3.7 Sonnet, and DeepSeek-R1 using logic puzzles like the Tower of Hanoi and River Crossing. In those setups, yes, models struggled more as problems got harder.

But here’s the thing: that’s not the same as saying AI can’t reason. It means AI can struggle with certain puzzles as they grow more complex. Concluding “AI can’t reason” from that is like saying a car can’t drive because it doesn’t fly.
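To get a feel for how quickly "harder" escalates in a puzzle like the Tower of Hanoi, here is a minimal sketch. It is my own illustration, not code from Apple's paper, and the function name `hanoi_moves` and the peg labels are made up for this example. It builds the shortest solution recursively and counts the moves.

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Return the shortest list of (from_peg, to_peg) moves for n disks.

    Illustrative helper only: the name and peg labels are assumptions,
    not anything defined in the paper.
    """
    if n == 0:
        return []
    return (
        hanoi_moves(n - 1, source, spare, target)    # park n-1 disks on the spare peg
        + [(source, target)]                         # move the largest disk
        + hanoi_moves(n - 1, spare, target, source)  # bring the n-1 disks back on top
    )


for n in (3, 5, 10):
    print(n, "disks ->", len(hanoi_moves(n)), "moves")
# 3 disks -> 7 moves
# 5 disks -> 31 moves
# 10 disks -> 1023 moves
```

Each extra disk roughly doubles the length of the shortest solution (2^n - 1 moves for n disks), so a flawless answer at 10 disks means getting more than a thousand steps right in a row. That is a test of long, error-free execution as much as a test of reasoning.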

2️⃣🤷‍♂️No Human Comparison

You know what’s missing from this paper? Us.

There’s no comparison to how humans would do with the same puzzles. Perhaps these tasks are simply too challenging for anyone. That context matters—but it’s completely absent from the research.

3️⃣🌍This Isn’t the Real World

Apple’s paper focuses on synthetic logic tasks, not on how AI performs in real-world use—like writing, tutoring, or coding help.

In the wild, we use AI to solve practical problems, often with a human verifying the output. That’s not what Apple tested. So sure, the models failed some puzzles, but it’s not clear that tells us much about AI reasoning where it counts.

4️⃣🛠️They Didn’t Let AI Use Tools

Another weird choice: Apple didn’t test these models using any tools—no scratchpad memory, no calculator functions, no plugins.

In practice, these are exactly the things that boost AI reasoning. So again, we’re not getting the full picture here.

5️⃣📅And Let’s Be Real About the Timing

This paper dropped right before WWDC—Apple’s big developer event. Coincidence? Probably not.

They’re behind in the AI race. They know it. And this paper lets them say: “See? AI doesn’t really work that well anyway.” It’s a setup to shape the narrative before they try to sell their version of AI.

💥 My Disruptive Take

This paper isn’t meaningless—but it’s definitely not the whole story. It’s Apple throwing a sharp elbow in a crowded AI race. They’re trying to steer the conversation before their own products launch.

Still, the paper does highlight something important:

We should explore where AI breaks down. Knowing the limits helps us build better systems.

And we need more than just headlines—we need serious research dollars and experiments that push AI to its edge in real-world contexts. Not just puzzles, but actual applications where reasoning shows up in messy, human ways.

Here’s an idea: instead of only pointing out the cracks, Apple could put some of those trillion-dollar resources toward figuring out how to patch them. Let’s invest in understanding—not just undermining—how AI can reason better, and how it can help us do the same.

Don’t fall for the headline hype. Keep asking better questions. Keep pushing for deeper understanding. And as always—

🧠 Sources & Citations

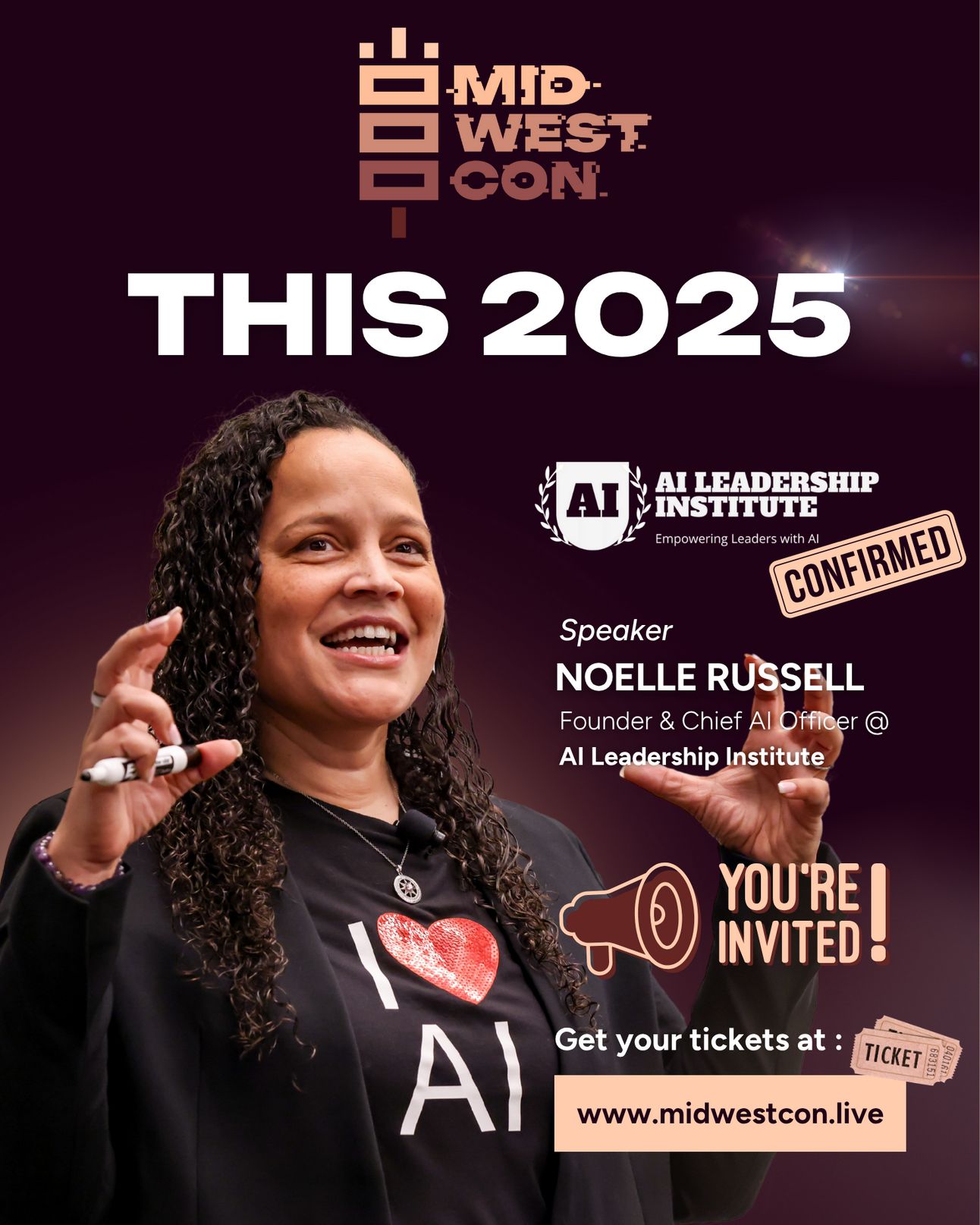

🫱🏻🫲🏻Meet NOELLE RUSSELL at MidwestCon 2025

Noelle Russell is a multi-award-winning technologist and entrepreneur specializing in artificial intelligence and emerging technologies. She has led innovative teams at NPR, Microsoft, IBM, AWS, and Amazon Alexa, with a focus on data, cloud, and AI solutions. As the founder of the AI Leadership Institute, Noelle is dedicated to promoting AI literacy and responsible AI adoption across industries. Her work has earned her recognition as a Microsoft Most Valuable Professional in Artificial Intelligence and VentureBeat’s Women in AI Responsibility and Ethics award. At MidwestCon, Noelle will discuss the ethical implications of AI and strategies for integrating AI responsibly into business practices.

🎧 The Disruption Now Podcast

In a world overflowing with data, innovation, and AI breakthroughs—there’s one essential human skill we seem to be forgetting:

The power to truly see each other.

Not through algorithms or analytics, but through empathy and intention.

In our latest episode of the Disruption Now Podcast, we talk with Eric Brown—a man whose life was lit by one simple yet profound experience: being seen. That single moment of recognition shaped his future in ways he could never have predicted.

Here’s the real call to action for builders, creators, and innovators: you’re not merely making products—you’re designing experiences that influence thought, reshape culture, and establish the next set of norms in this AI age.

Keep Disrupting, My Friends,

Rob, CEO of Disruption Now & Chief Curator of MidwestCon
