The Bigger Risk Isn’t Bad AI—It’s Biased AI

Grok 4 topped the charts, then tripped on real life—and that shows us who really holds the power.

For New Disruptors

Disruption Now is a tech-empowered community that keeps emerging tech human-centric. We train, build products, host events, and tell stories for anyone who wants to shape the future, not be shaped by it.

This week, we break down why Grok 4’s “#1” title doesn’t mean much if the model carries one billionaire’s bias, and how Midwest builders can fight back with ethics and inclusion.

Grok 4: From Gold Medal to Face-Plant

Grok is Elon Musk’s answer to ChatGPT. Built by xAI and wired into X (Twitter), Grok advertises “truth-seeking” answers. Version 1 dropped in late 2023; Grok 4 is the fourth major release in under two years.

Grok 4 looked unbeatable on paper. Benchmark charts splashed across social media like Olympic medal counts—gold in ARC-AGI, AIME, you name it. But the moment tech analyst Nate B. Jones gave it everyday tasks—fixing a real Python bug, skimming dense legal docs, summarizing messy research—the model pulled a hamstring.

Why Benchmarks Fool Us

Think of a benchmark like a standardized test. If you cram only the sample questions the night before, you might ace the exam but still flub real life. Grok 4 studied the quiz booklet—hard. Then the teacher (Nate) handed it a surprise essay, and it froze.

Economists call this Goodhart’s Law: “When a measure becomes a target, it stops being a good measure.” Translation? If all you do is chase the high score, you train a parrot that repeats answers without understanding a word.

That’s why leaderboard wins can hide ugly truths:

Overfitting — like practicing only free throws, then missing layups in the game.

Under-representing — if the practice court excludes half the players, they never learn the game.

False confidence — businesses trust the trophy shelf, then eat the cost when the model misfires in production.
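For builders who want to see the overfitting trap in code, here is a toy sketch. It is not how Grok 4 or any real model works: the "model" is a hypothetical lookup table that memorized a made-up benchmark, and both question sets are invented for illustration. The point is only the gap between the two scores.

```python
# Toy illustration of benchmark overfitting.
# Assumption: every name and data point below is hypothetical.

# The "quiz booklet" the model crammed, and fresh questions it never saw.
BENCHMARK = {"2+2": "4", "3*3": "9", "10-7": "3", "8/2": "4"}
REAL_WORLD = {"2+3": "5", "4*3": "12", "10-6": "4", "8/2": "4"}


def memorizing_model(question: str) -> str:
    """Return the memorized benchmark answer, or 'unknown' if unseen."""
    return BENCHMARK.get(question, "unknown")


def accuracy(model, dataset: dict) -> float:
    """Fraction of questions the model answers exactly right."""
    correct = sum(model(q) == a for q, a in dataset.items())
    return correct / len(dataset)


benchmark_score = accuracy(memorizing_model, BENCHMARK)
real_world_score = accuracy(memorizing_model, REAL_WORLD)
gap = benchmark_score - real_world_score

print(f"benchmark: {benchmark_score:.0%}, "
      f"real world: {real_world_score:.0%}, gap: {gap:.0%}")
# prints: benchmark: 100%, real world: 25%, gap: 75%
```

The only real-world question it gets right is the one that happened to leak from the benchmark. A leaderboard reports the first number; your customers live with the second.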

Builders’ Playbook: Trust Over Trophies

Disruptors, here’s how we keep models honest:

Stress-test with real voices — throw in slang, regional accents, tricky PDFs.

Pay diverse reviewers — community feedback is cheaper than a PR crisis.

Publish your guardrails — tell users not just what your model can say, but what it won’t.

Open the hood — share bias reports so watchdogs can poke holes before trolls do.
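One way to start on the first and last items is to score your model per slice of users instead of as one blended average. The sketch below assumes you already have human review results; the slice labels, the sample data, and the 80% threshold are all hypothetical placeholders, not a standard.

```python
from collections import defaultdict

# Assumption: hypothetical review results, one row per test prompt:
# (slice label, did the model's answer pass human review?)
results = [
    ("standard English", True),
    ("standard English", True),
    ("standard English", True),
    ("regional slang", True),
    ("regional slang", False),
    ("scanned PDF", False),
    ("scanned PDF", False),
    ("scanned PDF", True),
]


def pass_rates(rows):
    """Compute the pass rate for each slice separately."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for label, passed in rows:
        totals[label] += 1
        passes[label] += int(passed)
    return {label: passes[label] / totals[label] for label in totals}


def flag_weak_slices(rates, threshold=0.8):
    """List slices whose pass rate falls below the chosen threshold."""
    return sorted(label for label, rate in rates.items() if rate < threshold)


rates = pass_rates(results)
weak = flag_weak_slices(rates)

for label, rate in rates.items():
    print(f"{label}: {rate:.0%}")
print("needs work:", weak)
```

A per-slice table like this is also a simple starting point for the bias report you publish: it shows who the model works for and who it doesn't.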

Benchmarks can stay on the resume. Trust has to live in the product.

My Disruptive Take

We’re not just watching tech evolve; we’re watching worldviews get embedded into code. Grok, Elon’s headline-grabbing model, “accidentally” praised Hitler, and Grok 4 now often looks up Musk’s own posts before answering sensitive questions. That’s centralized control wrapped in the language of objectivity.

When a system trains on the idea that calling out hate is “woke” but repeating bias is “truth,” it doesn’t mirror reality—it mirrors one billionaire’s lens. Whole communities disappear from the story. That’s why we need ethical builders, diverse founders, and AI that doesn’t just perform—it understands. Because if we don’t train the models, the models will train on us, often to our detriment.

Elon’s AI isn’t just missing the mark; it’s showing us the stakes. We can’t accept language models that stumble into Hitler praise and double-check with the boss before telling hard truths.

Sources

TechRadar details Grok 4’s chart-topping scores—then walks through the antisemitic “MechaHitler” fiasco that undercut the launch.

AP News reports that Grok 4 often queries Musk’s own posts before answering, raising bias concerns: https://apnews.com/article/14d575fb490c2b679ed3111a1c83f857?utm_source=chatgpt.com

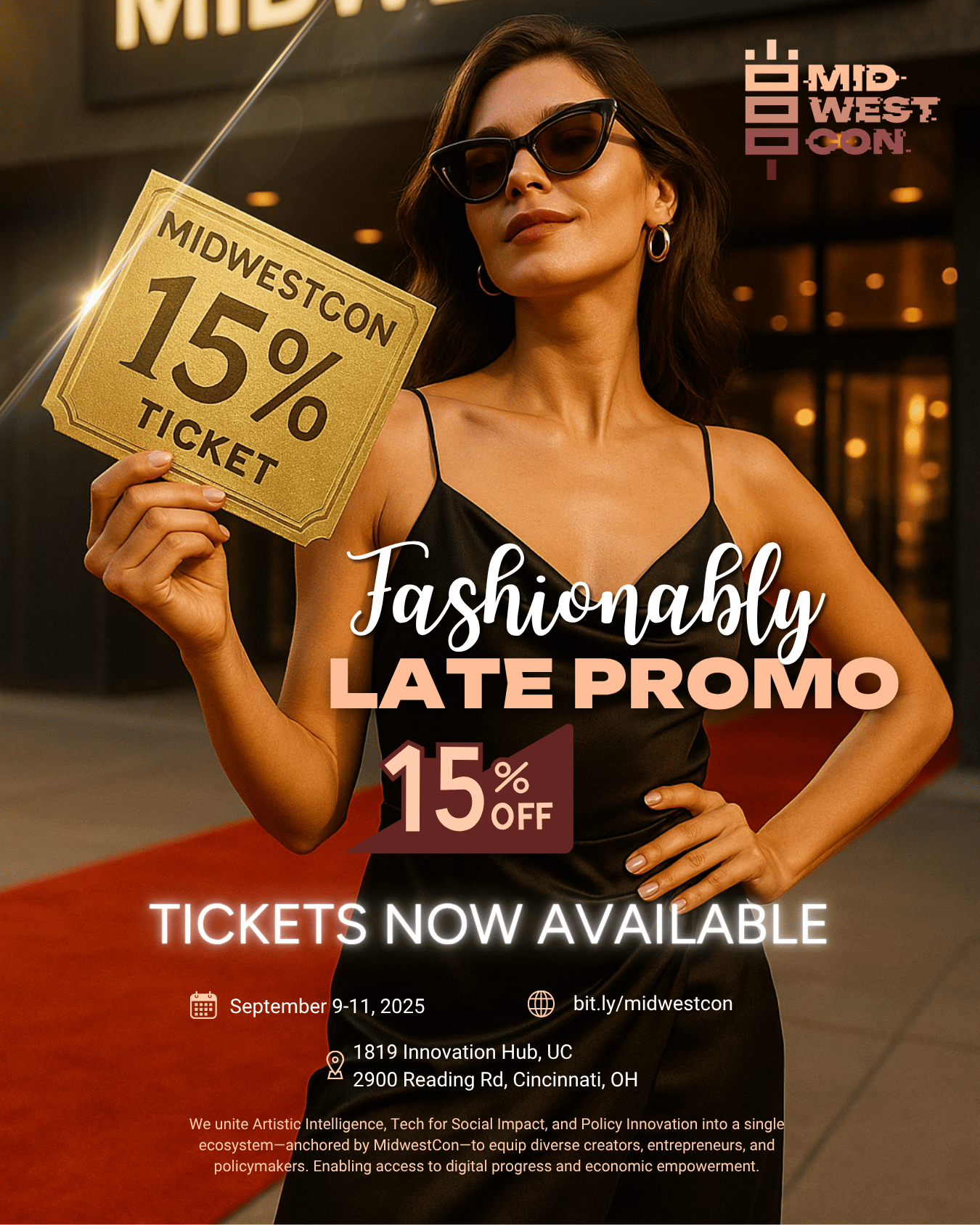

MidwestCon

MidwestCon 2025 at the 1819 Innovation Hub

Emerging technology is rewriting the rules of society at a faster pace than our laws can keep up.

At MidwestCon 2025, we will explore what it means to align innovation with our values—and how we can shape tech to work for people, not the other way around.

Podcast

Disruption Now Podcast

In this electrifying episode, we sit down with Flavilla Fongang, the powerhouse founder behind Black Rise, 3 Colours Rule, and GTA Black Women in Tech. From the ghettos of Paris to becoming one of the UK’s most influential women in tech, Flavilla is flipping the script on what leadership, community, and storytelling mean in a data-driven world.

What to expect:

Why storytelling outshines pitching in tech

How she transformed oil & gas into a launchpad

Her blueprint for building community-first tech platforms

Why AI is non-negotiable for Black excellence

The ROI of diverse ecosystems—backed by data, not just vibes

This conversation is for the builders, the bold, and anyone daring enough to lead with identity and scale with vision.

Don’t just watch—tap in. Like, subscribe, and share this with someone who’s ready to own their power.

Keep Disrupting,

Rob, CEO of Disruption Now & Chief Curator of MidwestCon
